Blogging Trends for Business Owners in 2016

Blogging has evolved greatly since its emergence in the 1990s. What started simply as a lifecasting outlet for individual content creators has progressively matured into an integral part of every business’s digital strategy. Blogging is no longer limited to leisure and lifestyle. It is no longer exclusive to foodie writers, exercise gurus, and relationship connoisseurs. Today, blogging means business.

Over the years, blogging has blossomed into a full-time profession, a fruitful digital marketing tactic, and an essential tool for organizations looking to establish their authority online. For businesses especially, blogging has become standard practice online.

If you want to make it online, you have to create content. Today, nearly every successful online business uses content writing in some way. According to the 2016 B2B Content Marketing Report, 81 percent of businesses now consider blogging to be a core component of their content marketing strategy.

From large companies to small businesses, CEOs to subject matter experts, mom-and-pop shops to your very own mother-in-law, it seems that virtually everyone is now taking advantage of what blogging has to offer. How will you keep up, stand out, and rise above the competition?

Let’s take a look at five blogging trends that will change how you approach business blogging in 2016.

1.) Size Now Matters

More people are publishing content online now than ever before, making it more difficult for businesses to truly thrive online. Today, the web is flooded with thin blog posts, articles, and ‘click-bait’ designed to reel in readers. The question of 2016 has become: how can we, as businesses, cut through the noise and get our content in front of the right eyes? How can we deliver quality content to new prospects?

The answer lies right in the question. To get our blogs in front of new eyes, we must take the time to create and deliver great content. According to marketing expert Ann Handley, this year will be the year of highly relevant, high-quality, in-depth material. She notes in an Orbit Media report,

“To thrive in an over-saturated content world, you’ll need to constantly write or produce (and syndicate) content with depth. Longer posts, more substantive content that people find useful and inspired.”

It appears that long-form content will become the new blogging norm. A post of a couple hundred words will no longer seem as useful as an extensive, data-driven, thousand-word post. While the average blog post today is about 900 words, 1 in 10 writers consistently write posts of 1,500 words or more. This year, size will matter.

2.) Quality Over Quantity

As long-form blogs take the stage, overly brief posts will be pushed to the back burner. When it comes to quantity vs. quality in blog writing in 2016, it seems that less is actually more.

Many businesses make the mistake of creating content for content’s sake. The consistent focus on churning out as many blogs as possible, however, can actually diminish the value of the writing over time. The fact is, readers are looking for quality content supported by stats (the more primary research you have, the better). They are looking for answers, for insights, for fresh ideas. They are looking for solutions. They are looking to be engaged.

3.) Engagement Will Become Your New Best Friend

In the past, content creation was largely about generating traffic. While organic traffic, page views, and unique visitors are all still important metrics, business bloggers will analyze success a bit differently in 2016. This year, the biggest measurement to pay attention to will be your audience’s engagement rate.

Are you able to attract readers with your content? More importantly, are you able to keep them on your website and engaged with it? Are you giving your audience something to consider, something to act on, or something to return to in the future? Are readers likely to share your content on social media and get others talking about your brand as well?

Can you say ‘yes’?

4.) Visual is Now in Focus

Blogging is not solely reliant on writing: how you frame your writing, and how you deliver your information, is vital to your blog posts’ success. In order to engage with your audience on a deeper level today, you must balance your blog text with a good structure and compelling graphics.

Recent research has shown that adding visual elements and graphics to your blog posts can help you generate up to 94 percent more views. It is no wonder. As long-form blogs become more common, so will the need for graphics to break up the text. Text-dense articles can be hard on the eyes. It is important, therefore, for business writers to balance their information with a multi-image format. If you are discussing a new product, for example, include several real-life, relatable photos to better engage your readers.

Images are not everything, though. This year, consider embracing other visually appealing elements, such as video, audio, quotes, and embedded social media posts, in your blog content. You might also feature free downloadable assets such as infographics and eBooks, or share podcasts and webinars by your internal subject matter experts. Continuously sharing fresh, engaging content will not only break up heavy blog posts, but will also lead to greater engagement among your readers.

5.) Time to Take Mobile Seriously

On average, people pick up their phones 150 to 200 times per day. That means that, in the United States, there are nearly 30 billion mobile moments in total each day. Still, some businesses have yet to acknowledge the importance of mobile as a part of their online strategy.

To efficiently generate clicks, leads, and sales through your website or blog, you must tailor it to the mobile user experience. Easy-to-read, digestible content, clickable links, and optimized images will make for the most cohesive and dynamic mobile design.

If you are not mobile-friendly, users will know it. Google now labels websites and blogs that are mobile-friendly right in the search results on mobile devices. If your website is not mobile responsive, you may be left in the dark. Optimizing your website or blog for mobile, therefore, must be a high priority in 2016. Check whether your website is mobile-friendly using Google’s Mobile-Friendly Test.

Having a blog on your business website can help you gain traction in the online landscape. Having a blog that is valuable, visually compelling, and mobile-friendly, however, can help you attract qualified traffic, convert engaged readers, and secure new business online. Don’t fall behind the competition. Make blogging, and these core blogging trends, a priority in your digital marketing strategy this year.

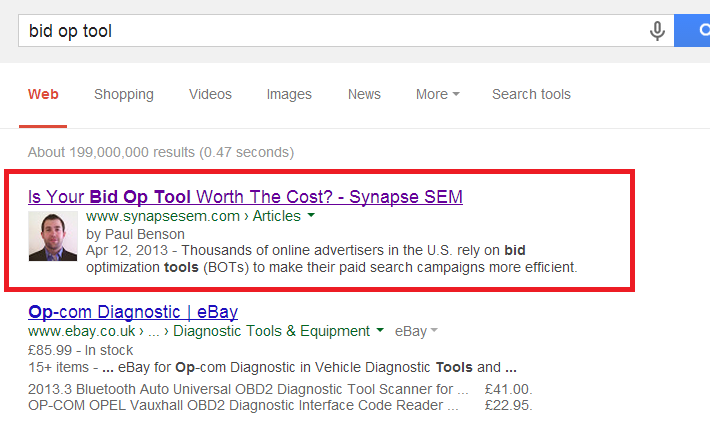

If you’d like to learn more about Synapse SEM, please complete our contact form or call us at 781-591-0752.